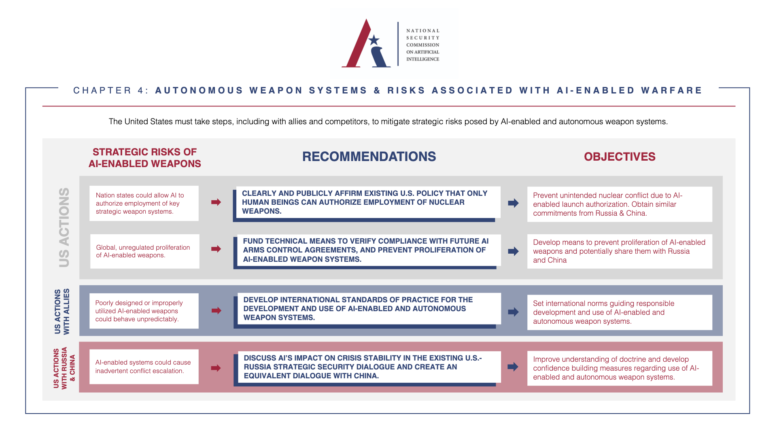

The U.S. National Security Commission on Artificial Intelligence (NSCAI) has issued a report whose recommendations call for important unilateral limitations on the military use of artificial intelligence, as well as multilateral cooperation on A.I. safety with China and Russia. The report recommends that the U.S. clearly and publicly affirm that A.I. will never be placed in control of nuclear weapons. It notes that this is already "existing U.S. policy that only human beings can authorize employment of nuclear weapons."

The full 752-page NSCAI report, issued March 1, 2021, includes the following language:

“ “ “

Clearly and publicly affirm existing U.S. policy that only human beings can authorize employment of nuclear weapons, and seek similar commitments from Russia and China. The United States should make a clear, public statement that decisions to authorize nuclear weapons employment must only be made by humans, not by an AI-enabled or autonomous system, and should include such an affirmation in the DoD’s next Nuclear Posture Review. This would cement and highlight existing U.S. policy, which states that “the decision to employ nuclear weapons requires the explicit authorization of the President of the United States.” It would also demonstrate a practical U.S. commitment to employing AI and autonomous functions in a responsible manner, limiting irresponsible capabilities, and preventing AI systems from escalating conflicts in dangerous ways.

…

Discuss AI’s impact on crisis stability in the existing U.S.-Russia Strategic Security Dialogue (SSD) and create an equivalent meaningful dialogue with China. The Departments of State and Defense should discuss AI’s impact on crisis stability within the existing U.S.-Russia SSD and create an equivalent meaningful dialogue with China. … The United States should use this channel to highlight how deploying unsafe systems could risk inadvertent conflict escalation, emphasize the need to conduct rigorous TEVV [test, evaluation, validation, and verification], and discuss where each side sees risks of a conventional conflict rapidly escalating in order to better anticipate future responses in a crisis. These dialogues could also plant the seeds for a future, standing dialogue exclusively focused on establishing practical and concrete confidence building measures surrounding AI-enabled and autonomous weapon systems. For instance, the United States, Russia, and China could work to develop an “international autonomous incidents agreement,” modeled after the 1972 Incidents at Sea Agreement, which would seek to define the “rules of the road” for behavior of autonomous military systems to create a more predictable operating environment and avoid accidents and miscalculations. They could also agree to integrate “automated escalation tripwires” into systems that would prevent the automated escalation of conflict in specific scenarios without human intervention, to include nuclear weapons employment as noted above.

…

Pursue technical means to verify compliance with future arms control agreements pertaining to AI-enabled weapon systems. The United States should actively pursue the development of technologies and strategies that could enable effective and secure verification of future arms control agreements involving uses of AI technologies.

…

Fund research on technical means to prevent proliferation of AI-enabled and autonomous weapon systems. Controlling the proliferation of AI-enabled and autonomous weapon systems poses significant challenges given the open-source, dual-use, and inherently transmissible nature of AI algorithms. The proliferation of makeshift autonomous weapon systems which primarily utilize commercial components will be particularly difficult to control via regulation and will necessitate capable intelligence sharing and domestic law enforcement efforts to prevent their use by terrorists and other non-state actors. Regarding more sophisticated autonomous weapon systems, the United States should double down on efforts to design and incorporate proliferation-resistant features, such as standardized ways to prevent unauthorized users from utilizing such weapons, or reprogramming a system’s functionality by changing key system parameters. DoD and DoE should fund technical research on such methods, and if appropriate, these methods could be shared with Russia and China, or potentially other countries, to prevent the proliferation or loss of control of certain AI-enabled autonomous weapon systems.

” ” ”

MEDIA CONTACT

Company- NeuroBinder, Inc.

Contact Person- Jeremy McHugh

Email- jeremy@neurobinder.com

Country- USA

Website- https://neurobinder.com